During the last steps, the feasibility of voice recording, speech-to-text and text-to-speech was studied. Now comes another difficult part: the brain!

The last steps relied on external services for voice recognition and text-to-speech.

When it comes to Jarvis’ brain, the idea is twofold:

- Onboard answer engine: part of the analysis will be done onboard with simple regular expressions (as seen in the “proof of concept” step).

- External answer engine: the other part of the analysis is triggered whenever the first one fails. More than a fallback engine, this service should enrich the answer as much as possible.
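The two-tier idea above can be sketched roughly as follows. This is only an illustration, not the actual Jarvis code: the rules in `ONBOARD_RULES`, the `answer` function and the `fake_external` stand-in are all hypothetical names made up for the example.

```python
import re
from typing import Callable

# Hypothetical onboard rules: each pairs a regular expression with a reply builder.
ONBOARD_RULES = [
    (re.compile(r"\b(hello|hi)\b", re.IGNORECASE),
     lambda m: "Hello, sir."),
    (re.compile(r"\bturn (on|off) the (\w+)", re.IGNORECASE),
     lambda m: f"Turning {m.group(1).lower()} the {m.group(2).lower()}."),
]


def answer(text: str, external: Callable[[str], str]) -> str:
    """Try the onboard rules first; call the external engine only if none match."""
    for pattern, build_reply in ONBOARD_RULES:
        match = pattern.search(text)
        if match:
            return build_reply(match)
    return external(text)


def fake_external(text: str) -> str:
    """Stand-in for the external answer engine (no network call is made here)."""
    return f"[external] {text}"
```

With this sketch, `answer("Please turn ON the light", fake_external)` is handled onboard, while a question such as "distance to the moon" falls through to the external engine.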

Wolfram|Alpha is a computational knowledge engine. It’s an online service that answers factual queries directly by computing the answer in terms of structured data, rather than providing a list of documents or web pages that might contain the answer as a search engine would do. It’s a pretty good candidate for Jarvis.

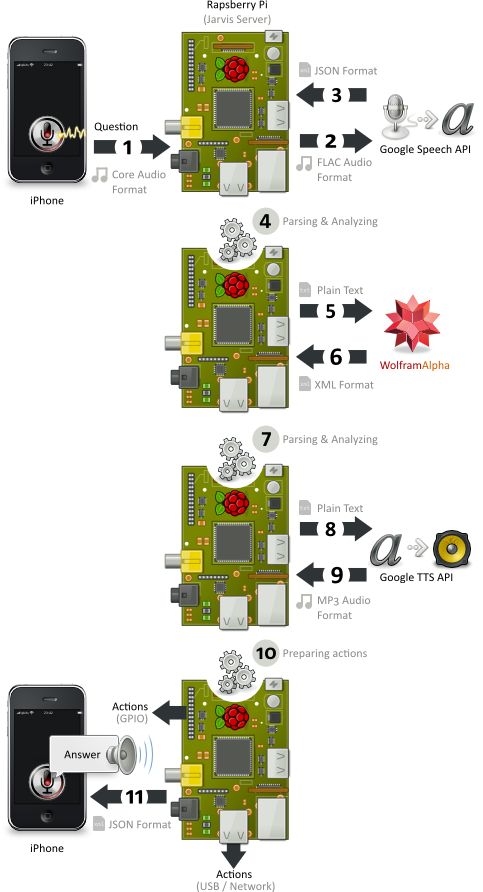

Jarvis’ Workflow

This simplified illustration describes Jarvis’ workflow, including voice acquisition, external speech-to-text, parsing & analyzing (including Wolfram|Alpha service), external text-to-speech and actions:

Wolfram|Alpha

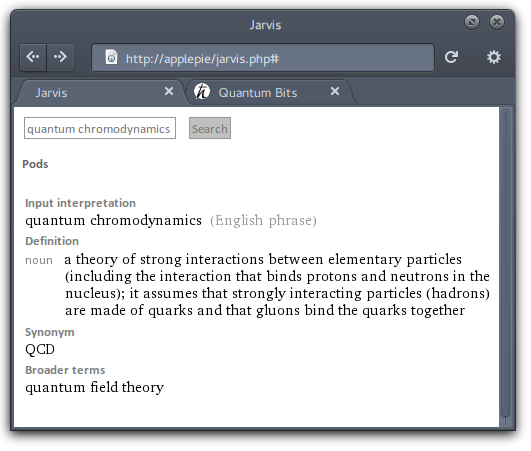

Wolfram|Alpha provides a web-based API that lets clients submit free-form queries. It is implemented as a standard REST interface using HTTP GET requests. Each result is returned as a descriptive XML structure wrapping the requested content format.

Roughly speaking, these results are divided into two kinds of sections: assumptions and pods.


The web-service API can be accessed from any language that supports web requests and XML. Furthermore, the Wolfram|Alpha community provides a set of bindings for Ruby, Perl, Python, PHP, .NET (yuck!), Java (and Mathematica, of course). 😎
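To give an idea of what "web requests and XML" means in practice, here is a minimal sketch in Python using only the standard library. It is not the PHP binding used in this post. The endpoint URL, the `input` and `appid` parameter names and the `pod` / `subpod` / `plaintext` element names are my understanding of the v2 API and should be checked against the official documentation; `SAMPLE` is a hand-written response in that assumed shape, not real service output.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlencode

# Assumed v2 query endpoint; verify against the current API documentation.
API_URL = "http://api.wolframalpha.com/v2/query"


def build_query_url(question: str, app_id: str) -> str:
    """Build the HTTP GET URL for a free-form query."""
    return API_URL + "?" + urlencode({"input": question, "appid": app_id})


def extract_pods(xml_text: str) -> dict:
    """Map each pod title to the plain-text lines found in its subpods."""
    root = ET.fromstring(xml_text)
    pods = {}
    for pod in root.iter("pod"):
        lines = [node.text for node in pod.iter("plaintext") if node.text]
        pods[pod.get("title", "")] = lines
    return pods


# Hand-written sample in the assumed response shape (illustrative only).
SAMPLE = """<queryresult success="true">
  <pod title="Input interpretation"><subpod><plaintext>2 + 2</plaintext></subpod></pod>
  <pod title="Result"><subpod><plaintext>4</plaintext></subpod></pod>
</queryresult>"""
```

The URL returned by `build_query_url` could then be fetched with `urllib.request.urlopen` and the response body passed to `extract_pods`; the network call is left out here so the sketch runs without an AppID.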

I created an account on Wolfram|Alpha and applied for an AppID (which I received right away). A free account allows up to 2000 queries a month, which should be plenty.

I downloaded the PHP binding to my Raspberry Pi, read the documentation (no, I didn’t, I’m kidding. Who reads manuals ???), and in less than 100 lines of code I got this running:

It’s a first try, but it looks promising. Jarvis may soon have a brain.

Great project. I have a similar one, available on my site: an AI written in Python whose brain runs on a Raspberry Pi.

I have an Android client and a command-line one. Both have voice recognition and TTS thanks to Google.

I control the lights with the Raspberry Pi’s GPIO pins.

Good luck with the rest of your project. As for me, I’m going to look into motion and OpenCV to add “sight” to my AI.