Adding Siri to both my old iPad 1 and my iPhone 4 was a failure 🙁 Jailbreaking went fine, but messing with SiriPort was a complete disaster, and it took me nearly 2 hours to turn these devices back into something other than a brick.


And thus… no SiriProxy for me. But then again, why should I mess with existing closed-source crap when I can build my own stuff? Hmm?

Project “Jarvis”

Here comes Project “Jarvis”. OK, the name sucks… I shouldn’t watch these Marvel movies. And the logo is no more than a copy of Siri’s own logo, with a touch of raspberry color. I’ll work on these later: for now, it is time to put the ideas behind this project to the test.

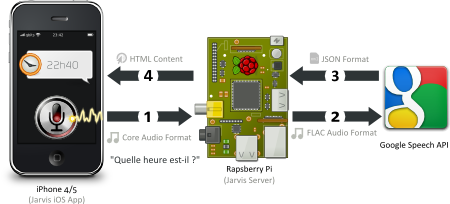

The principles are quite simple:

- 1 – A mobile app records a simple question and sends it to the Raspberry Pi

- 2 – The Raspberry Pi converts the recorded voice into a format Google’s Speech API understands and sends it to the API

- 3 – Google’s Speech API returns its speech-to-text interpretation as a JSON data structure

- 4 – The Raspberry Pi parses the data, builds an answer from it, and sends that answer back to the mobile app (and possibly to a Home Automation system)

- 5 – The mobile app displays the answer to the question

- 6 – Applause and tears of joy

Proof of concept

First, let’s record a simple question. “Quelle heure est-il ?” (What time is it?) will be a good start.

Then, let’s send it to the Raspberry Pi:

```
scp heure.caf root@applepie:/opt/jarvis
```

In order to get it interpreted by Google’s Speech API, one has to convert the recording from Apple’s CAF (Core Audio Format) to the much more standard FLAC format:

```
apt-get install ffmpeg
ffmpeg -i heure.caf heure.flac
```
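If the conversion is triggered from a script on the Pi rather than by hand, it can be wrapped as below. This is a minimal sketch, not the code used in this project: the function names are hypothetical, and the `-ac 1` (mono) and `-ar 44100` (sample rate) options are my own assumption, added so that the FLAC file really matches the `rate=44100` announced to Google in the next request.

```python
import subprocess
from pathlib import Path
from typing import List


def ffmpeg_caf_to_flac_cmd(src: str, dst: str, rate: int = 44100) -> List[str]:
    """Build the ffmpeg command line that converts a CAF recording to FLAC.

    -y overwrites an existing output file, -ac 1 downmixes to mono and
    -ar forces the output sample rate (assumed options, see above).
    """
    return ["ffmpeg", "-y", "-i", src, "-ac", "1", "-ar", str(rate), dst]


def convert_caf_to_flac(src: str, rate: int = 44100) -> Path:
    """Run ffmpeg and return the FLAC path (heure.caf -> heure.flac)."""
    dst = Path(src).with_suffix(".flac")
    # check=True raises CalledProcessError if ffmpeg exits with an error
    subprocess.run(ffmpeg_caf_to_flac_cmd(src, str(dst), rate), check=True)
    return dst
```

Keeping the command builder separate from the `subprocess.run` call makes the exact ffmpeg invocation easy to log or test without a recording at hand.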

Let’s send it to Google’s Speech API:

```
curl -i -X POST -H "Content-Type:audio/x-flac; rate=44100" -T heure.flac \
  "https://www.google.com/speech-api/v1/recognize?xjerr=1&client=chromium&lang=fr-FR&maxresults=10&pfilter=0"
```
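The same request can also be prepared with Python’s standard library. The server code described in this post is PHP, so this is only an illustration of what that script has to send: the endpoint and query parameters are copied from the curl command above (an unofficial, undocumented API that may change or stop working at any time), and `build_speech_request` / `recognize` are hypothetical names.

```python
import urllib.parse
import urllib.request

# Endpoint copied from the curl command above (unofficial API).
SPEECH_URL = "https://www.google.com/speech-api/v1/recognize"


def build_speech_request(flac_bytes: bytes, lang: str = "fr-FR",
                         rate: int = 44100) -> urllib.request.Request:
    """Prepare (but do not send) the POST request carrying the FLAC audio."""
    query = urllib.parse.urlencode({
        "xjerr": 1,
        "client": "chromium",
        "lang": lang,
        "maxresults": 10,
        "pfilter": 0,
    })
    return urllib.request.Request(
        f"{SPEECH_URL}?{query}",
        data=flac_bytes,
        # The rate must match the sample rate of the FLAC file.
        headers={"Content-Type": f"audio/x-flac; rate={rate}"},
        method="POST",
    )


def recognize(path: str) -> str:
    """Send a FLAC file and return the raw JSON body as text."""
    with open(path, "rb") as f:
        req = build_speech_request(f.read())
    with urllib.request.urlopen(req, timeout=10) as resp:
        return resp.read().decode("utf-8")
```

Unlike `curl -i`, `recognize` returns only the response body, which is the part worth parsing.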

After a second or two, I got the answer from Google:

```
HTTP/1.1 200 OK
Content-Type: application/json; charset=utf-8
Content-Disposition: attachment
Date: Sun, 10 Feb 2013 22:50:42 GMT
Expires: Sun, 10 Feb 2013 22:50:42 GMT
Cache-Control: private, max-age=0
X-Content-Type-Options: nosniff
X-Frame-Options: SAMEORIGIN
X-XSS-Protection: 1; mode=block
Server: GSE
Transfer-Encoding: chunked

{"status":0,"id":"f75093db420033490c2424cdb58de963-1","hypotheses":[{"utterance":"quel heure est il","confidence":0.61982137},{"utterance":"quelle heure est il"},{"utterance":"quel temps fait il"},{"utterance":"quelle heure est-il"},{"utterance":"quel temps va til"}]}
```

Not bad 😎
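Only the JSON body matters here. Google lists several hypotheses, best first, and in this sample only the first one carries a `confidence` score. A minimal parsing sketch, assuming the body has already been separated from the HTTP headers; `parse_hypotheses` is a hypothetical name, and treating any non-zero `status` as “nothing recognized” is my assumption.

```python
import json
from typing import List, Optional, Tuple

# The body of the answer shown above.
SAMPLE = ('{"status":0,"id":"f75093db420033490c2424cdb58de963-1","hypotheses":'
          '[{"utterance":"quel heure est il","confidence":0.61982137},'
          '{"utterance":"quelle heure est il"},{"utterance":"quel temps fait il"},'
          '{"utterance":"quelle heure est-il"},{"utterance":"quel temps va til"}]}')


def parse_hypotheses(body: str) -> List[Tuple[str, Optional[float]]]:
    """Return (utterance, confidence) pairs in the order Google sent them.

    Hypotheses without a "confidence" field get None.
    """
    data = json.loads(body)
    if data.get("status") != 0:
        # Assumption: a non-zero status means nothing usable came back.
        return []
    return [(h["utterance"], h.get("confidence"))
            for h in data.get("hypotheses", [])]


if __name__ == "__main__":
    for utterance, confidence in parse_hypotheses(SAMPLE):
        print(utterance, confidence)
```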

Polishing up

First, let’s write a few lines of PHP on the Raspberry Pi (see my previous post for the details of the Nginx/PHP installation):

- to trigger the ffmpeg conversion

- to send the converted FLAC recording to Google’s speech-to-text engine

- to get the JSON data structure back

- to parse the JSON result (a few regexps would do)

- to send back a well-thought-out answer to the question

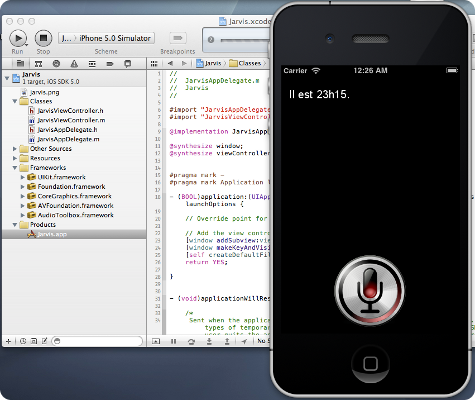

Then, let’s fire up Xcode and, with the help of the Core Audio API documentation, write a few lines of Objective-C.

Pretty cool for two hours of work 😎

Now what?

I guess the proof of concept is conclusive 🙂

The catch, though, is that it is not exactly fast. Almost… as slow as Siri.

The exchange with Google is the bottleneck. Also, I’d rather not depend on a private external API. I guess one of the next steps will be to see how PocketSphinx would fit into this project.
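Before swapping anything out, it helps to measure each stage of the round trip (conversion, request to Google, answer building) separately. A small hypothetical helper, sketched in Python:

```python
import time
from contextlib import contextmanager
from typing import Dict, Iterator


@contextmanager
def timed(stage: str, timings: Dict[str, float]) -> Iterator[None]:
    """Store the wall-clock duration of one pipeline stage, in seconds."""
    start = time.perf_counter()
    try:
        yield
    finally:
        # Recorded even if the stage raises, so failed calls are timed too.
        timings[stage] = time.perf_counter() - start


def slowest(timings: Dict[str, float]) -> str:
    """Name of the stage that took the longest."""
    return max(timings, key=timings.get)
```

Wrapping the ffmpeg call, the HTTP exchange and the answer logic in separate `with timed(...)` blocks shows where the second or two actually goes, and gives a baseline to compare PocketSphinx against.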

The CAF-to-FLAC conversion could also be done on the iOS side of the project. I’ll check out this project later: https://github.com/jhurt/FLACiOS.

Also, Jarvis is literally speechless. Adding a few “text-to-wav” functionalities shouldn’t be too hard, since espeak and festival are already packaged by Raspbian.
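As a starting point, espeak can write its speech to a WAV file instead of playing it. A minimal sketch, assuming espeak is installed (`apt-get install espeak`) and using its `-v` (voice) and `-w` (write WAV file) options; the function names and the `reponse.wav` filename are hypothetical.

```python
import subprocess
from typing import List


def espeak_cmd(text: str, wav_path: str, voice: str = "fr") -> List[str]:
    """Command line asking espeak to render text into a WAV file.

    -v selects the voice (here French), -w writes a WAV file
    instead of playing the sound.
    """
    return ["espeak", "-v", voice, "-w", wav_path, text]


def speak_to_file(text: str, wav_path: str = "reponse.wav") -> None:
    """Run espeak; raises CalledProcessError if it fails."""
    subprocess.run(espeak_cmd(text, wav_path), check=True)
```

The resulting file could then be sent back to the mobile app alongside the text answer.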

Then, of course, I’ll have to put a bit of thought into Jarvis’s brain (text analyzer) and hook the Raspberry Pi to some kind of Home Automation system.

And the iOS part needs a lot of looooove.

But I guess that’s enough for a first step.
