This is our fifth part of a series of posts on (a few selected) hidden realities : many-worlds interpretation of quantum mechanics, multiverse linked to the space-time geometries and dynamics, higher dimensional multiverse, holographic multiverse and simulated multiverse.

For the previous posts, please see:

- On hidden realities (part 1) – Many-worlds interpretation of quantum mechanics

- On hidden realities (part 2) – Space-time geometries and dynamics

- On hidden realities (part 3) – Higher dimensional universes

- On hidden realities (part 4) – Holographic principle

Contrary to these previous posts, this one will not deal with contemporaneous physics, but with mathematics and computer science. Keep in mind that the simulated reality hypothesis is less than solid and prone to crackpot theories, though it raises interesting points. This is mostly the occasion to explore mathematical points of view, which is what this post is focused on.

State of science at the turn of last century

In 1894, physicist Albert A. Michelson declared: “… it seems probable that most of the grand underlying principles have been firmly established … An eminent physicist remarked that the future truths of physical science are to be looked for in the sixth place of decimals.” (this quote is often misattributed to William Thomson a.k.a Lord Kelvin).

Encouraged by the prowesses of the industrial revolutions, there was at the time a all-mighty feeling that everything there was to know was known.

Nothing could be more wrong than this… In 1905, Albert Einstein revolutionized the notion of an absolute space and time (ironically, based on the interpretation of an experiment from Michelson and Morley). Later on, Max Planck interpretation of the black body radiation started the quantum revolution. In 1915, Albert Einstein took on Isaac Newton‘s gravitation. Marie Curie‘s experimental lab lead the way to the discoveries which turned out to the finding of fundamental interactions that were totally unknown. These were not just simple shifts in physics. These were paradigm shifts that changed our view of Nature forever.

The same held true for mathematics. And it turned out that mathematics were also on the brink of a revolution.

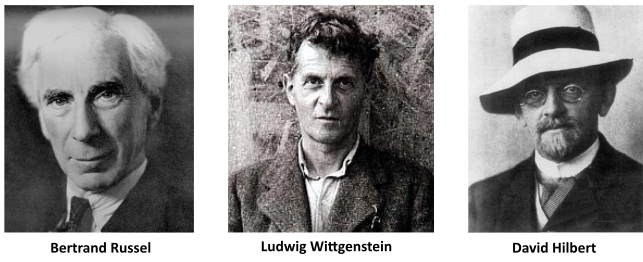

In 1900, mathematician David Hilbert presented at the Paris conference of the International Congress of Mathematicians a list of 10 unsolved mathematical problems (later extended to 23 problems).

He declared:”We must not believe those, who today, with philosophical bearing and deliberative tone, prophesy the fall of culture and accept the ignorabimus. For us there is no ignorabimus, and in my opinion none whatever in natural science. In opposition to the foolish ignorabimus our slogan shall be: Wir müssen wissen — wir werden wissen! (‘We must know — we will know!’)”.

If these problem were to be solved, mathematics would be “complete”. Hilbert’s program proposes to ground all existing theories to a finite, complete set of axioms, and provide a proof that these axioms were consistent. He proposed that the consistency of more complicated systems, such as real analysis, could be proven in terms of simpler systems. Ultimately, the consistency of all of mathematics could be reduced to basic arithmetic.

Some of Hilbert’s problems are still not solved. But one of his problem – the second – turned into unexpected conclusions that would shake the foundations of mathematics themselves. Hilbert’s second problem can be expressed simply: prove that arithmetic is free of any internal contraction.

Just like physicists proved Michelson wrong using one of his own experiment (and revolutionized physics for ever), mathematician’s quest for Hilbert’s program revolutionized mathematics for ever.

Bertrand Russel was a true extraordinary British gentleman. As a writer, historian and philosopher, he was awarded the Nobel prize in literature in 1950. As a political activist, he was a liberal, a socialist and a pacifist. As a mathematician, he had a considerable influence on logic, set theory, cognitive science, artificial intelligence and computer science. Along with Gottlob Frege, G. E. Moore and Ludwig Wittgenstein, he is considered one of the founders of analytic philosophy.

Between 1910 and 1927, he wrote with Alfred North Whitehead a three-volume work on the foundations of mathematics named Principia Mathematica. This work was an attempt to create a logical basis for mathematics – a set of axioms and inference rules in symbolic logic from which all mathematical truths could be proven.

Lugwig Wittgenstein was Russel’s protégé. He published in 1921 the Tractatus Logico-Philosophicus. From a mathematical point of view, this very short (70 pages) but quite austere book had a very broad objective: identifying and clarifying the relation between logic and language. This was of considerable step forward on the road to Russel’s work and to Hilbert’s program.

However, in 1931, Gödel’s two incompleteness theorems changed everything.

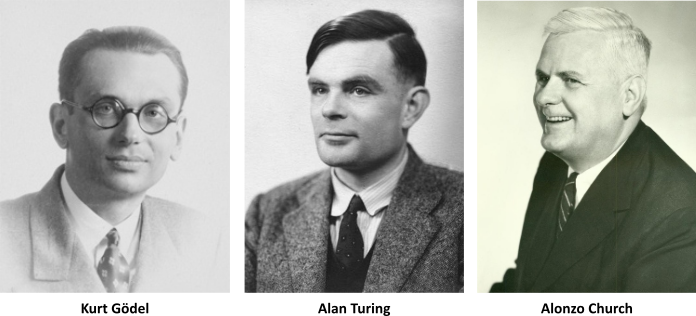

Kurt Gödel is nowadays considered with Aristotle to be one of the most significant logicians in history. His incompleteness theorems establish inherent limitations of all but the most trivial axiomatic systems capable of doing arithmetic. The two results are usually interpreted as showing that Hilbert’s program to find a complete and consistent set of axioms for all mathematics is impossible, giving a negative answer to Hilbert’s second problem.

They definitively proved that Hilbert’s program, and the Principa Mathematica – and in fact any other attempt – could never achieve its goal: for any set of axioms and inference rules proposed to encapsulate mathematics, either the system must be inconsistent, or there must in fact be some truths of mathematics which could not be deduced from them.

Ignorabimus there will be.

The birth of computer science

Gödel’s first incompleteness theorem shows that any consistent formal system (that includes enough of the theory of the natural numbers) is incomplete: there are statements expressible in its language that are unprovable within the system. Such statements are called undecidable.

For these formal system, a complete and consistent finite list of axioms can never be created: each time a new statement is added as an axiom, there are other true statements that still cannot be proved, even with the new axiom !

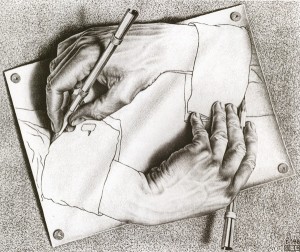

The liar paradox is the sentence “This sentence is false.”. A quick analysis shows that it cannot be true, nor can it be false. If “this sentence is false” is true, then the sentence is false, but then if “this sentence is false” is false, then the sentence is true, and so on… The sentence, in modern terms, is undecidable.

What is interesting here is that the “liar paradox” sentence is a strange loop: a strange loop arises when, by moving only upwards or downwards through a hierarchical system, one finds oneself back to where one started. In the liar paradox, a strange loop arises because the sentence is self-referent: when one applies the sentence “this sentence is false” to the sentence “this sentence is false”, undecidability and strange loop occur.

This is precisely what Gödel’s did to arithmetic. Gödel showed that mathematics and logic contain strange loops: propositions that not only refer to mathematical and logical truths, but also to the symbol systems expressing these truths.

In order to prove his first incompleteness theorem, Gödel created indeed a strange loop: he “arithmetized” arithmetic. Gödel’s numbering is a function that assigns to each symbol or formula of any formal language a unique natural number. A sequence of natural numbers represents then a sequence of symbols. Such a numbering can be interpreted as an encoding in which a number is assigned to each symbol.

To complete his theorem, Gödel chose arithmetic – the mathematics of natural numbers – as a formal language. He applied a “liar paradox sentence”-like reasoning: he cleverly derives an arithmetical proposition G which encodes the statement that G itself is unprovable within the system. Now if G were false, then it would follow (from this encoding) that it was not unprovable: hence it would have to be provable within the system. But then, given the conditions of the encoding, its negation not(G) – which encodes the statement that G is provable – would itself have to be true and hence provable if the system is complete. It follows that if the system is complete, it cannot be consistent, since both G and not(G) would then be provable within it. Hence no system that is complete can also be consistent.

Gödel’s proof is so beautifully simple that is moves me inside.

But even if Gödel has shown that any consistent axiomatic system of arithmetic would inevitably leave some arithmetical truths unprovable, this did not in itself rule out the existence of some “effectively computable” decision procedure which would infallibly, and in a finite time, reveal whether or not any given proposition P was, or was not, provable.

This is where comes Alan Turing, my all-time favorite mathematician : he tackled another of Hilbert’s problems – the 10th problem, also called “Entscheidungsproblem” (the “decision problem”). The Entscheidungsproblem is solved when we know a procedure that allows for any given logical expression to decide by finitely many operations its validity.

In 1936, Alonzo Church (who introduced the notion of algorithm) and Alan Turing published independent papers showing that a general solution to the Entscheidungsproblem is impossible, assuming that the intuitive notion of “effectively calculable” is captured by the functions computable by a Turing machine (or equivalently, by the ones expressible in Alonzo Church’s lambda calculus).

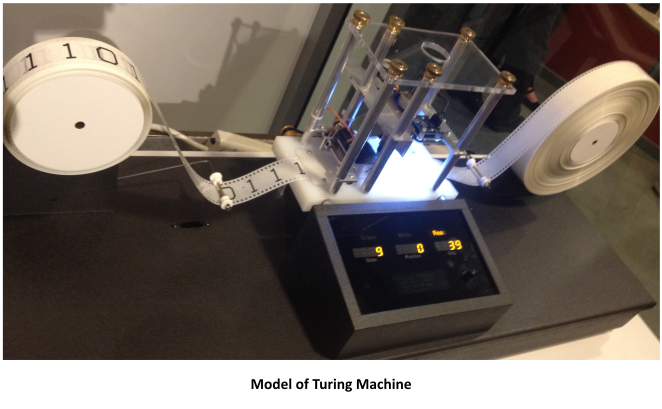

A Turing machine is an idealized device that manipulates symbols on a strip of tape according to a table of rules. They were described for the first time in Alan Turing 1936’s article, “On Computable Numbers, with an Application to the Entscheidungsproblem“, which appeared in the Proceedings of the London Mathematical Society. These Turing machines (though they were called a-machine at the time) consist of a read/write head with a paper tape passing through it. The tape is divided into squares, each square bearing a single symbol – 0 or 1, for example. This tape is the machine’s general purpose storage medium, serving both as the vehicle for input and output and as a working memory for storing the results of intermediate steps of the computation. The device is completed by a state register that stores the state of the Turing machine. Among these is the special start state with which the state register is initialized. A finite table of instructions (what we would now call a program or an algorithm), given the current stat and current symbol read by the head, tells the machine what operation it must perform : erase or write a symbol, move the head right or left, change state or halt.

A Turing machine is an idealized device that manipulates symbols on a strip of tape according to a table of rules. They were described for the first time in Alan Turing 1936’s article, “On Computable Numbers, with an Application to the Entscheidungsproblem“, which appeared in the Proceedings of the London Mathematical Society. These Turing machines (though they were called a-machine at the time) consist of a read/write head with a paper tape passing through it. The tape is divided into squares, each square bearing a single symbol – 0 or 1, for example. This tape is the machine’s general purpose storage medium, serving both as the vehicle for input and output and as a working memory for storing the results of intermediate steps of the computation. The device is completed by a state register that stores the state of the Turing machine. Among these is the special start state with which the state register is initialized. A finite table of instructions (what we would now call a program or an algorithm), given the current stat and current symbol read by the head, tells the machine what operation it must perform : erase or write a symbol, move the head right or left, change state or halt.

Turing machines are not intended as practical computing technologies, but rather as hypothetical devices representing computing machines, for the purpose of building mathematical theories.

A Turing machine is said to terminate in case the program ran by the machine halt no matter what the inputs are. The halting problem is the problem of determining, from a description of an arbitrary program and an input, whether the program will finish running or continue to run forever.

An example of a non-terminating Turing machine program is a program that calculates sequentially each digit of the decimal representation of . A (Turing) computable number is a number that can be written out by a Turing machine: a number that can be computed to within any desired precision by a finite, terminating program. Turing reduced the halting problem for Turing machines to the Entscheidungsproblem.

Based on Turing machines, Turing was able to prove that not every real number is computable. The decimal representations of some real numbers are so completely lacking in pattern that there simply is no finite table of instructions of the sort that can be followed by a Turing machine for calculating the nth digit of the representation, for arbitrary n.

Alan Turing proved that a general algorithm to solve the halting problem for all possible program-input pairs cannot exist. The halting problem is historically important because it was one of the first problems to be proved undecidable in Gödel’s sens. For example, one such consequence of the halting problem’s undecidability is that there cannot be a general algorithm that decides whether a given statement about natural numbers is true or not.

Gödel’s and Turing’s negative answers to Hilbert’s 2nd and 10th problems were huge nails in Hilbert’s program coffin:

- Gödel proved that any sufficient complex formal system always includes undecidable statements

- Turing proved that there are no general algorithm that can decide whether a given statement is provable

Hilbert’s “dream” was shattered. But computer science was born (even though computers and programs themselves can be dated to Charles Babbage or Lady Ada Lovelace)

Simulated reality

A computer simulation is a program, run on a single computer, or a network of computers, to reproduce behavior of a system. The simulated reality hypothesis contends that reality is in fact a simulation (and most likely a computer simulation).

Of course, everybody thinks of the “The Matrix” film saga. But these simulations would be quite different from the ones in these movies. In these movies (although the second and third opus of the Matrix trilogy were so tragically bad, nonsensical and badly acted that I barely call them movies), the universe is simulated but the conscious minds are not : human brains are “interfaced” with a simulated world. In the simulation hypothesis, consciousness is just another figment of the simulation.

Obviously, this hypothesis is very thin. While the simulation argument is an interesting question regarding nature and technology, it leads to several problems. The first being that it is completely unfalsifiable as it is impossible to devise an experiment to test the hypothesis and potentially prove it to be false. The simulated reality is thus no science … and actually prone to many crackpot theories !

Nevertheless, let’s explore the hypothesis, while trying to stay away from those theories:

- Is the statement “We are living in a simulated reality” decidable ?

The answer is no, for the same reason the liar paradox sentence is undecidable or undecidable propositions exist in all (complex enough to at least contain arithmetic) formal systems: strange loop and self-reference. Even if a hypothetical experiment was built to test the hypothesis and turned out negative – that the world was not simulated – it would still be insufficient because there is the potential that this is merely what the simulation wants us to think, or that we are living inside a simulation inside a simulation.

- Would a simulated reality explain why mathematics can describe our world ?

Indeed, our laws of physics are of mathematical nature. Why can our world be so well described by mathematics – or even be intelligibly described at all – is an incredibly good (and difficult) question. It would make a lot of sense if our world was of mathematical nature, like a computer simulation would be. Greek philosopher Plato thought that ideas (mathematics) are abstractions belonging to their ideal realm. One statement of his philosophy is that mathematics are not created but discovered. Indeed, Mandelbrot or Julia fractal sets complexity was somehow more discovered (via computer visualization) than created. But we shall not mistake one thing with its description (mathematical or not). Even if our laws of physics describe Nature by the means of mathematics, it does not mean that Nature is necessary mathematical. Using mathematics to describe the universe might as well be a limitation of our own capacities to describe (or understand) reality. Furthermore, we know that mathematically unprovable but nevertheless existing propositions do exist. And, ironically, the simulated reality is one of them.

- Would a simulated reality explain why we live in a quantum world ?

We are living in a quantum world. Matter and energy are discrete quantities, not continuous ones. Time and space might as well be quantized, as suggested by quantum loop gravity. We introduced Planck areas in a previous post: an area enclosed by a (very) little square which has side length equal to the Planck length. They can be seen as fundamental pixel-like bits of information. Being in a computer simulation would explain these pixel-like bits, wouldn’t it ? Wouldn’t this be signs of the calculus power limit of the computer simulating our reality ? Well, it’s not that simple. There is home for continuous quantities even in quantum theories. Symmetries are good examples, and they are the keys to modern physics. A digital simulation would not easily explain this continuous properties of Nature. Nor it would naturally provide a framework for wave-function collapses. Digitization is not quantization !

- What if we hack the simulation ?

Side channel attacks are forms of cryptoanalysis that attempt to get information about the underlying hardware/logic implementations of a system. A timing attack for example measures the time it takes for various computations to be performed which can provide various kinds of data to clever people. What would append it one hacks the simulation ? Well, technically, this is what we do already. Every scientific experiment is nothing more than running an “algorithm” and every datum collected is nothing more than the information given back by the system, which we attempt to logically put together into a coherent paradigm. Physicists are Hackers.

- Can consciousness be simulated ?

In the simulated reality hypothesis, consciousness is just another part of the simulation, like any others. It implies that the mind or consciousness could be simulated. Is that even possible ? Well, it is hard to say, since nobody actually knows what mind or consciousness really is … Some, like Roger Penrose, argue that while a formal proof system cannot prove its own inconsistency, Gödel unprovable results are provable by human mathematicians. Penrose’s view is that human minds are not describable as formal proof systems. Human ability to escape from self-references would be of non computable nature. He goes on arguing that this capability would be the origin of consciousness and that wave function collapse would be physical basis of this non-computable process. He suggests that microtubules are suitable places where quantum processing could be implemented in the brain. This last hypothesis has yet to be confirmed by observation to get more solid grounds. Nevertheless, Penrose’s reasoning on the non-computable nature of consciouness is rather striking, and would make simulated reality hardly possible (at least if it runs on conventional non quantum-computers and if the human mind is part of the simulation)

- Does all the existence of a simulated reality matters ?

Probably not. Occam’s Razor suggests that, all other things being equal, the hypothesis with fewest assumptions is most likely the correct one. The simulation hypothesis is undecidable and doesn’t bring any new information about our reality (otherwise new information will be a mean to decide whether or not we’re living in a simulation). Its argument rests then solely on numerous assumptions regarding the means and motives of the simulators and the technology that powers them. Occam’s Razor would suggest that simulation is the far more complex hypothesis compared to non-simulation. The simulated hypothesis is thus a matter of belief, not a matter of reasoning. Whether you believe in it or not … the universe won’t change a bit. So the question of its existence is actually rather moot.

Poetic ending

There were many – and perhaps too many – films dealing with multiple universes and simulated reality. Some very bad (I won’t give names, except for Avatar, which, in spite of its colossal budget, doesn’t – for me – even qualifies as a z-movie), some really good, like Exitenz, the first opus of The Matrix, Source Code, Strange days or Avalon. But it would have been to easy to conclude this posts with one of these movies.

In the spirit of this post, let’s introduce a bit of human mind. A bit of subjectiveness.

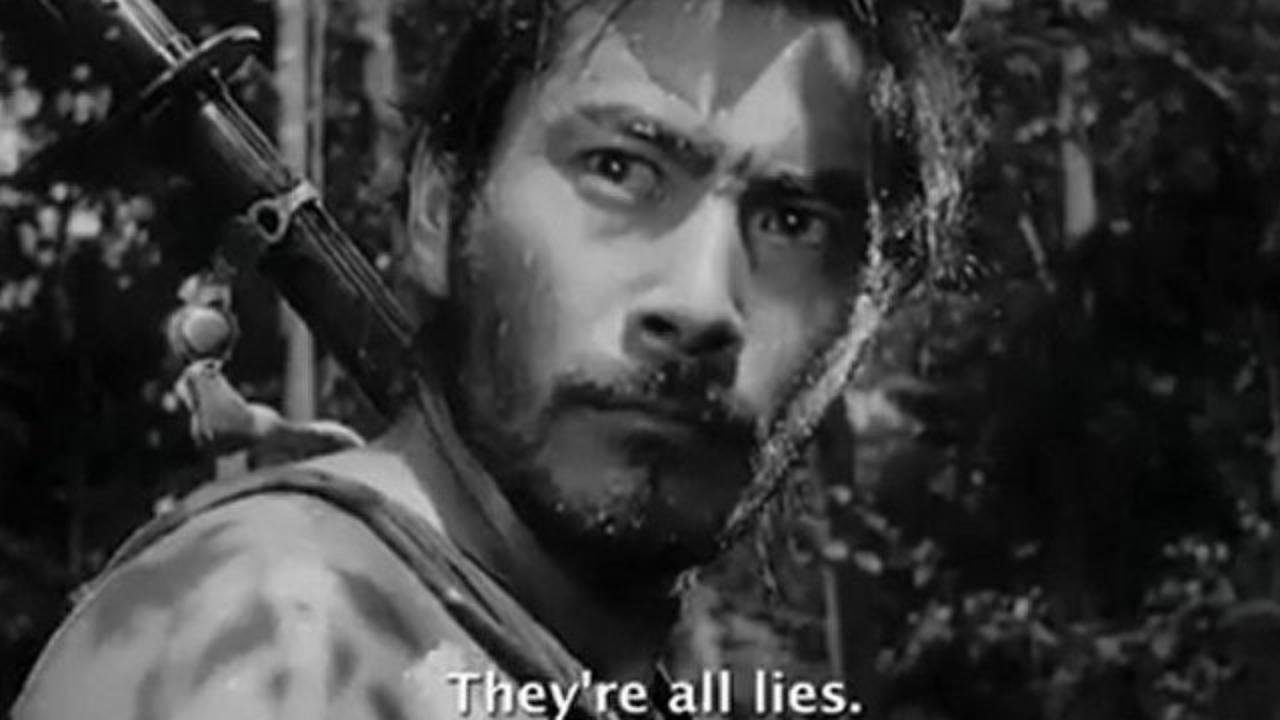

Some films present parallel realities that are actually different contrasting versions of the narrative itself. Commonly this motif is presented as different points of view revolving around a central (and sometimes undecidable) “truth”. The typical example being Akira Kurosawa‘s true masterpiece Rashomon.

Conversely, often in film noir and crime dramas, the alternative narrative is a fiction created by a central character, intentionally — as in The Usual Suspects — or unintentionally — as in Angel Heart.

Less often, the alternative narratives are given equal weight in the story, making them truly alternative universes.